Introduction

I. Institutional Innovation: A Three-Dimensional Breakthrough in Full-Chain Governance

The "Measures" establish an integrated governance framework that combines a closed loop of rights and responsibilities, technical standards, and enforcement mechanisms, systematically addressing the content traceability and accountability problems raised by generative AI. The innovation rests on three core designs: bringing every participant in the chain under fine-grained management, building a technical standards system adapted to multiple modalities, and introducing a dynamic, tiered regulatory strategy. Together they balance technological neutrality against commercial feasibility, while blockchain evidence preservation and intelligent assessment give legal accountability real reach. This marks a substantive move in China's AI governance from principle-based norms to operational rules.

1. Refined Allocation of Responsibility

For the first time, service providers, dissemination platforms, and users are all brought into a full-chain responsibility system. Service providers must embed explicit labels at the generation stage (e.g., an "AI" corner mark on text with a height ≥5%, or a Morse code rhythm identifier for audio) and write implicit labels, such as the service provider code, into the metadata. Dissemination platforms must verify the metadata and add risk warning labels (e.g., "AI-generated" or "Possibly AI-generated"), which departs from the traditional "safe harbor" principle. Users must proactively declare AI-generated content when publishing it and may not maliciously remove labels. The result is closed-loop management from generation through dissemination. For example, a short video platform must develop a metadata verification system that automatically identifies non-compliant content and triggers a risk warning, and users who violate the rules face account suspension or legal liability.

2. Technical Standard Adaptation Across Modalities

Differentiated rules apply to text, audio, video, and other modalities. Text must carry "AI" or "Generated/Synthetic" wording or a corner mark at a position of the provider's choosing, such as the beginning or end. Audio must insert a Morse code rhythm identifier at the start: "short-long, short-short", which is Morse code for the letters "A" and "I". For video, the text of the explicit label must be no shorter than 5% of the shortest side of the frame, and the label must remain on screen for at least 2 seconds at normal playback speed. Implicit labels embed an enterprise-specific code and a unique identifier (e.g., generated with the SHA-256 algorithm) in the file data. These hidden marks are intended to survive even when an image is compressed by more than 50%, so that the content source remains traceable and legal evidence collection has technical support.

3. Tiered Compliance and Dynamic Regulation

The "Measures" adopt a dual-track enforcement mechanism of "phased compliance + intelligent assessment" that balances regulatory rigor with industry adaptability. A transition period allows small and medium-sized enterprises (SMEs) to implement the technical standards in stages: start-ups initially need only meet the basic labeling requirements (e.g., adding an "AI" label at the end of text) and then move gradually toward more advanced requirements such as metadata management. Large platforms, by contrast, must complete the full set of technical upgrades (e.g., dynamic video watermarks and Morse code identifiers for audio) within the transition period.

In parallel, a dynamic evaluation system uses machine learning algorithms to monitor labeling quality and content risk in real time. Content that falls short automatically triggers secondary verification by the platform, while high-risk content is traced quickly through the blockchain evidence system. In judicial practice, cases have already used the service provider code in implicit labels to hold both the developer of the generation tool and the dissemination platform accountable, overcoming the traditional "technological neutrality" defense and extending the reach of liability. The mechanism lowers compliance costs for SMEs, raises governance efficiency through intelligent regulation, and closes the loop between technical standards and legal accountability.
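To make the thresholds above concrete, the following is a minimal Python sketch, not an implementation prescribed by the "Measures" or their supporting standards. It checks an explicit video label against the 5% and 2-second thresholds and builds a hypothetical implicit-label record whose identifier is derived with SHA-256. The function names, the metadata field names (`aigc`, `provider_code`, `content_id`), and the choice to hash the provider code together with the content bytes are all assumptions made for illustration.

```python
import hashlib

MIN_HEIGHT_PERCENT = 5      # label text height >= 5% of the shortest frame side
MIN_DURATION_SECONDS = 2.0  # label shown for >= 2 s at normal playback speed


def video_label_compliant(frame_width: int, frame_height: int,
                          label_height: int, label_seconds: float) -> bool:
    """Check an explicit video label against the two thresholds in the text."""
    shortest_side = min(frame_width, frame_height)
    # Integer arithmetic avoids floating-point rounding at the 5% boundary.
    tall_enough = label_height * 100 >= MIN_HEIGHT_PERCENT * shortest_side
    return tall_enough and label_seconds >= MIN_DURATION_SECONDS


def build_implicit_label(content: bytes, provider_code: str) -> dict:
    """Build a hypothetical metadata record (field names are assumptions)."""
    digest = hashlib.sha256(provider_code.encode("utf-8") + b":" + content)
    return {
        "aigc": True,
        "provider_code": provider_code,
        "content_id": digest.hexdigest(),
    }


def verify_implicit_label(content: bytes, label: dict) -> bool:
    """Platform-side check: recompute the identifier and compare."""
    expected = build_implicit_label(content, str(label.get("provider_code", "")))
    return label.get("content_id") == expected["content_id"]
```

A plain hash of the file bytes changes whenever the file is re-encoded, so this sketch does not attempt the robustness to compression described above. That property would require a watermarking technique, which is outside the scope of this illustration.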

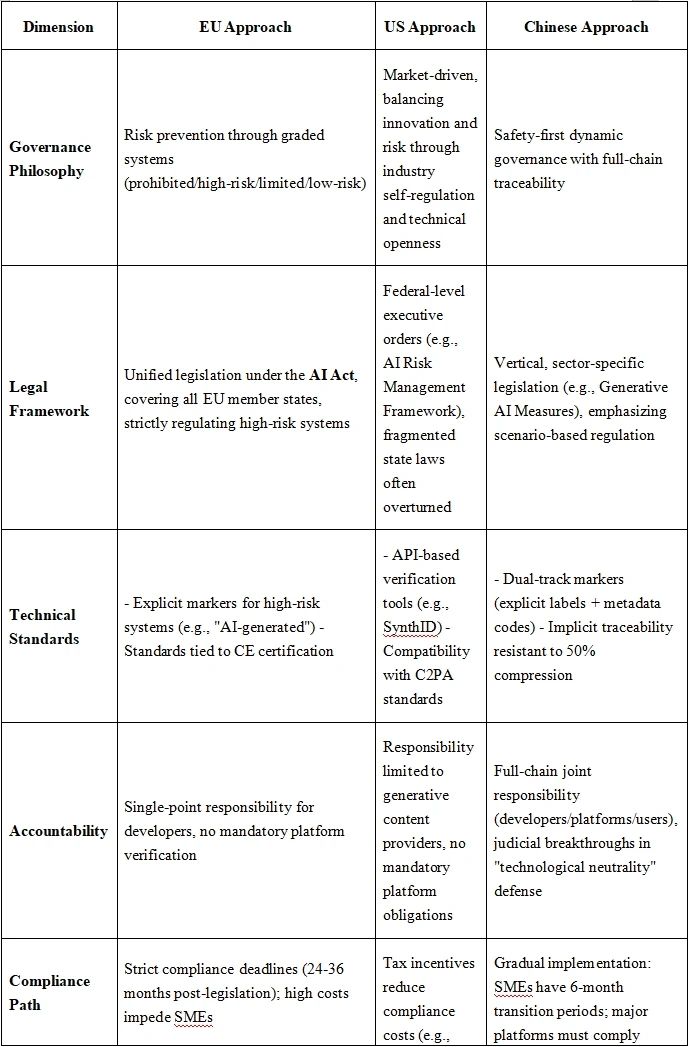

II. International Comparison: The Competitive Edge of China's Approach

Internationally, the governance of AI-generated content currently follows three distinct paths: the EU builds a unified legislative framework centered on risk prevention, the US relies on market forces and has developed a decentralized regulatory system, and China achieves dynamic full-chain governance through administrative leadership. The three differ markedly in governance philosophy, legal framework, and technical standards, reflecting both cultural differences and different ways of balancing technological innovation against public safety. The comparison table draws on the latest policy developments and judicial practice from 2024-2025 to set out what the Chinese, EU, and US approaches share and where they diverge.

As the comparison shows, the EU centers on risk prevention and uses the AI Act to build a unified risk-tiering framework with strict controls on high-risk scenarios (e.g., biometric identification), but its high compliance costs and lag behind technical iteration may stifle innovation. The US follows market-driven principles, using federal executive orders and tax incentives to promote industry self-regulation and technological openness, but its loose accountability mechanism leaves traceability blind spots, and fragmented state legislation weakens enforcement. China establishes full-chain joint liability through vertical, sector-specific legislation and reinforces safety and controllability with dual-track labels and blockchain traceability, though the international compatibility of its technical standards still needs to improve.

These paths are rooted in historical tradition: the EU is law-centric, the US puts industry first, and China emphasizes administrative leadership. The challenge ahead for AI-generated content governance may lie in balancing global consensus against technological fragmentation, in order to cope with the competition over standards and the compute barriers that geopolitical rivalry brings.

III. Corporate Compliance Implementation: A Full-Cycle Risk Management Approach

In light of current regulatory policy and market conditions, and to address the varied risks that arise across an enterprise's full operating cycle, enterprises are advised to build a systematic risk prevention and control framework along several dimensions. Full-cycle risk management should center on data governance, algorithm compliance, and dynamic monitoring, forming a closed loop that covers prevention beforehand, control during the event, and traceability afterward. Traceability designed into the full data lifecycle (e.g., encrypted storage of biometric information and blockchain evidence preservation) lays the compliance foundation, algorithm transparency and clear allocation of responsibility guard against legal risk, and real-time risk monitoring with tiered response provides dynamic control. The specific implementation paths are as follows.

1. Data Governance: Laying a Compliance Foundation to Avoid Risks at the Source

In a data-driven business environment, enterprises should establish a comprehensive data compliance management system that ensures data is lawfully collected, stored, and used, thereby reducing legal risk and meeting regulatory requirements.

Data pooling and protection: Enterprises should give priority to building private data resource pools and take reasonable compliance measures throughout data processing. For example, training data should be de-identified in stages, and data involving biometric information in particular can be stored in encrypted form to reduce the risk of leaking sensitive data. Blockchain technology can also be used to preserve evidence of the data, so that its source is traceable.

Personal data processing: Where personal information is processed, enterprises must strictly comply with the Personal Information Protection Law and related laws and regulations. For example, in an AI customer service system, an enterprise should apply both voiceprint stripping and semantic obfuscation to lower the risk of identifying individuals and to avoid excessive collection or misuse of users' personal information.

Review and response mechanisms: Enterprises should regularly review their data collection and usage processes to confirm legal compliance and to guard against risks such as "data poisoning" and privacy leaks. They should also establish data compliance review and emergency response mechanisms so that they can act quickly to limit legal risk when a data security incident occurs. For example, an enterprise can adopt a data access permission policy, audit its data processing workflows regularly, and take prompt remedial action when it discovers non-compliant data use.

2. Algorithm Compliance and Legal Liability: Guarding Against Regulatory Risk

In AI applications, transparent algorithmic decision-making and clear allocation of responsibility are central to compliance management. Enterprises using AI systems should ensure that their algorithms comply with current laws and regulations and take effective measures against legal risk.

Contractual clarity: Define the scope of algorithm use and the division of responsibility in contracts and user agreements. For example, in scenarios such as intelligent legal consultation and automated contract review, a disclaimer can state that the AI plays only an assisting role, which helps avoid liability disputes caused by algorithmic misjudgment.

Internal audits: Establish an internal compliance review mechanism and evaluate the compliance of AI models at regular intervals. For example, algorithms that affect consumer rights should meet the requirements of the Consumer Rights Protection Law and the E-Commerce Law so that they do not give rise to price discrimination, data misuse, or similar problems.

Explainability: The decision logic of an AI system should be explainable so that the parties can trace the decision process when a dispute arises. Enterprises can improve transparency by providing decision records or setting up a manual review mechanism. For example, an automated contract review system should give risk alerts for key clauses and let the user confirm them manually.

Regulatory trends: Follow regulatory trends in the industry and adjust the AI compliance strategy in time. For high-risk fields (e.g., finance, healthcare, legal services), enterprises can work with a professional legal team to develop a targeted compliance plan that meets the sector's regulatory requirements.

3. Dynamic Monitoring and Emergency Response: Building a Closed-Loop Management System

Enterprises should establish a sound compliance monitoring system to ensure that their AI systems meet legal and regulatory requirements for data security, content generation, and algorithmic fairness.

Real-time monitoring and tiered response: Set up a compliance index dashboard that tracks key indicators such as data leakage risk and algorithmic bias in real time, together with a tiered response mechanism for handling potential legal risk promptly. For low-risk events (e.g., missing labels), technical means such as automatic relabeling can correct the problem. For medium-risk events (e.g., copyright disputes), a compliance assessment should be completed within 48 hours, followed as appropriate by takedown, modification, or obtaining supplementary authorization. For high-risk events (e.g., deepfakes or the generation of illegal content), the enterprise should immediately suspend the relevant function and report to the regulator at the same time, in order to limit its legal liability.

Filtering mechanisms: Following e-commerce industry practice, enterprises can deploy an instruction filter on the input side of an AI system to intercept prompts that involve illegal conduct or unfair competition (e.g., "generate fake promotional copy"). On the output side, they can review generated content for compliance, automatically attach risk labels, and use blockchain evidence preservation so that the content is traceable for later legal and compliance review.

Regulatory updates: Keep up with the latest legal and regulatory developments so that internal compliance policies stay in step with regulatory requirements. Conduct regular compliance self-assessments and work with legal advisors to refine the AI compliance management system.
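The tiered response and input filtering described above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not a prescribed or complete compliance system: the event type names, the action names, the blocked-phrase list, and the choice to route unrecognized events to the medium tier are all hypothetical. Only the three tiers, the 48-hour window, and the regulator report for high-risk events come from the text.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical event types, mapped to the tiers named in the text.
EVENT_RISK = {
    "missing_label": Risk.LOW,
    "copyright_dispute": Risk.MEDIUM,
    "deepfake": Risk.HIGH,
    "illegal_content": Risk.HIGH,
}

# Hypothetical blocklist for the input-side instruction filter.
BLOCKED_PHRASES = ("generate fake promotional copy",)


@dataclass(frozen=True)
class ResponsePlan:
    risk: Risk
    action: str
    deadline: Optional[datetime]
    report_to_regulator: bool


def plan_response(event_type: str, detected_at: datetime) -> ResponsePlan:
    """Map a compliance event to the tiered response described in the text."""
    # Assumption: unrecognized events go to the medium tier for human review.
    risk = EVENT_RISK.get(event_type, Risk.MEDIUM)
    if risk is Risk.LOW:
        return ResponsePlan(risk, "auto_relabel", None, False)
    if risk is Risk.MEDIUM:
        deadline = detected_at + timedelta(hours=48)
        return ResponsePlan(risk, "compliance_review", deadline, False)
    # High risk: suspend immediately and report to the regulator in parallel.
    return ResponsePlan(risk, "suspend_feature", detected_at, True)


def prompt_allowed(prompt: str) -> bool:
    """Input-side filter: reject prompts containing a blocked phrase."""
    lowered = prompt.lower()
    return not any(phrase in lowered for phrase in BLOCKED_PHRASES)
```

A substring blocklist is only a placeholder for a real filter, which would need semantic matching. It is used here to keep the example self-contained.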

Conclusion

As generative AI technology develops rapidly, regulatory systems around the world are evolving quickly to meet the legal and compliance challenges posed by AI content generation. China's Measures for the Labeling of AI-Generated and Synthetic Content (《人工智能生成合成内容标识办法》) and their supporting standards, with full-chain governance, dual-track explicit and implicit labels, and a dynamic regulatory mechanism, have formed a distinctive compliance model within the international competitive landscape. For enterprises, establishing a sound data governance system, clarifying responsibility for algorithm compliance, and building real-time monitoring and emergency response mechanisms are the key paths to reducing legal risk and sustaining technological development. As international standards converge and technology regulation deepens, enterprises will need to keep following legal developments and refining their compliance systems, advancing compliance steadily while protecting innovation, in order to secure a favorable position in the global AI governance system.

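Author: 吕品一 (Lü Pinyi), Attorney, 上海中岛律师事务所; Master of Laws, Jilin University. Practice areas: commercial dispute litigation and arbitration; general corporate; human resources and employment; TMT and data compliance. Work WeChat: babababe_

Phone: (021) 80379999

Email: liubin@ilandlaw.com

Address: 27/F, Times Financial Center, 68 Yincheng Middle Road, Pudong New Area, Shanghai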
